
At I/O 2023, Google introduced Project Gameface, an open-source technology that lets users control a computer’s cursor with head movements and facial gestures, making gaming and other tasks more accessible.

Project Gameface was inspired by the story of Lance Carr, a quadriplegic video game streamer with muscular dystrophy, and Google partnered with him to build it. Carr’s condition affects his muscles and makes using a standard mouse difficult, so Google set out to create a hands-free alternative.

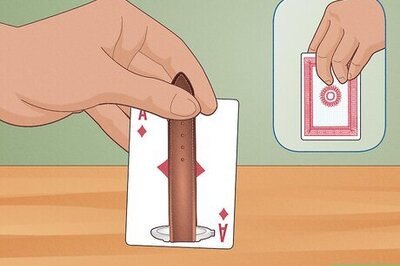

Users can navigate their devices with facial expressions, for example by raising their eyebrows to click and drag or opening their mouth to move the cursor. The technology is especially useful for people with disabilities, giving them a new way to interact with Android smartphones.
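Project Gameface’s own code isn’t reproduced here, but the basic idea of triggering an action from a facial expression can be sketched in Python. This minimal sketch assumes each camera frame yields a score between 0 and 1 per expression, named after MediaPipe blendshape categories such as `browInnerUp` (standing in for raised eyebrows) and `jawOpen` (an open mouth). The `GestureBinding` and `GestureDetector` classes, the action labels and the default thresholds are all hypothetical. Two thresholds (hysteresis) are used so that holding an expression fires its action once rather than on every frame.

```python
from dataclasses import dataclass


@dataclass
class GestureBinding:
    """Hypothetical binding of one facial expression to one action."""
    blendshape: str         # expression name, e.g. "browInnerUp" or "jawOpen"
    action: str             # hypothetical action label, e.g. "click_and_drag"
    threshold: float = 0.5  # score that fires the action (a "gesture size" setting)
    release: float = 0.3    # score below which the expression counts as relaxed again


class GestureDetector:
    """Fires each bound action once per expression, using two thresholds (hysteresis)."""

    def __init__(self, bindings):
        self.bindings = list(bindings)
        # One "currently held" flag per binding, so a held expression fires only once.
        self._held = [False] * len(self.bindings)

    def update(self, scores):
        """Process one frame of {expression name: score in [0, 1]}; return actions fired."""
        fired = []
        for i, b in enumerate(self.bindings):
            score = scores.get(b.blendshape, 0.0)
            if not self._held[i] and score >= b.threshold:
                self._held[i] = True
                fired.append(b.action)
            elif self._held[i] and score < b.release:
                self._held[i] = False
        return fired


detector = GestureDetector([
    GestureBinding("browInnerUp", "click_and_drag"),
    GestureBinding("jawOpen", "move_cursor"),
])
print(detector.update({"browInnerUp": 0.7}))  # ['click_and_drag']
print(detector.update({"browInnerUp": 0.6}))  # [] (still held, no repeat)
print(detector.update({"browInnerUp": 0.2}))  # [] (relaxed, re-armed)
print(detector.update({"browInnerUp": 0.8, "jawOpen": 0.9}))  # ['click_and_drag', 'move_cursor']
```

Raising `threshold` makes a binding require a more pronounced expression, which is one plausible way a "gesture size" setting could work.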

The development of Project Gameface was guided by three principles: giving people with disabilities more ways to use their devices, keeping the solution cost-effective enough for widespread use, and prioritising ease of use and customisation.

Google has open-sourced more code for Project Gameface and worked with playAbility and Incluzza, so that developers can build the technology into a range of applications. For example, Incluzza, an Indian social enterprise that supports people with disabilities, explored using Project Gameface in education and work settings, such as composing messages or searching for jobs.

“We’ve been delighted to see companies like playAbility utilize Project Gameface building blocks, like MediaPipe Blendshapes, in their inclusive software. Now, we’re open-sourcing more code for Project Gameface to help developers build Android applications to make every Android device more accessible. Through the device's camera, it seamlessly tracks facial expressions and head movements, translating them into intuitive and personalized control,” Google said in its blog post.

According to the blog post, developers can now build applications that let users customise their experience by adjusting facial expressions, gesture sizes, cursor speed, and more.

Google has also added a virtual cursor for Android devices, extending Project Gameface’s capabilities. The cursor follows the user’s head movements, tracked with MediaPipe’s Face Landmarks Detection API, while facial expressions trigger actions.
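Head-driven cursor movement can be sketched as mapping the frame-to-frame displacement of a tracked face point onto screen pixels. This sketch assumes the caller supplies one normalised (x, y) landmark position per frame, for example a nose-tip landmark from a face landmark detector. The `HeadCursor` class, its `speed` and `smoothing` parameters, and the exponential smoothing used to damp jitter are illustrative assumptions, not Project Gameface’s actual implementation.

```python
class HeadCursor:
    """Moves an on-screen cursor by the frame-to-frame motion of a tracked head point."""

    def __init__(self, screen_w, screen_h, speed=800.0, smoothing=0.5):
        self.w, self.h = screen_w, screen_h
        self.speed = speed          # pixels per unit of normalised head motion (user setting)
        self.smoothing = smoothing  # 0 = raw input, values near 1 = heavier smoothing
        self.x, self.y = screen_w / 2, screen_h / 2  # start at screen centre
        self._prev = None           # last smoothed head position

    @staticmethod
    def _clamp(v, hi):
        return min(max(v, 0.0), float(hi))

    def update(self, head_x, head_y):
        """Take one normalised (0..1) head position; return the new cursor position."""
        if self._prev is None:
            self._prev = (head_x, head_y)  # first frame only sets the reference point
            return self.x, self.y
        px, py = self._prev
        # Exponential moving average damps landmark jitter.
        sx = self.smoothing * px + (1 - self.smoothing) * head_x
        sy = self.smoothing * py + (1 - self.smoothing) * head_y
        self._prev = (sx, sy)
        # Scale the smoothed displacement by the speed setting and keep the cursor on screen.
        self.x = self._clamp(self.x + (sx - px) * self.speed, self.w - 1)
        self.y = self._clamp(self.y + (sy - py) * self.speed, self.h - 1)
        return self.x, self.y


cursor = HeadCursor(1080, 2400, speed=800.0, smoothing=0.0)
print(cursor.update(0.5, 0.5))    # (540.0, 1200.0): first frame, no movement
print(cursor.update(0.625, 0.5))  # (640.0, 1200.0)
```

Because front-camera images are usually mirrored, a real app may need to flip the sign of the horizontal movement. That detail is left out of this sketch.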

Developers also have access to 52 facial blendshape values, which support a wide range of functions and customisation options. The overall goal of Project Gameface is to make technology more inclusive and accessible for all users, regardless of their physical abilities.
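The 52 blendshape values arrive as one score per named expression. The sketch below assumes a result layout like that of MediaPipe’s Face Landmarker in Python, where each face’s blendshapes form a list of categories exposing `category_name` and `score`. A `namedtuple` stands in for those objects so the code runs without MediaPipe installed. The helper names and the 0.5 cut-off are hypothetical. When several bound expressions are active at once, the sketch selects the strongest one.

```python
from collections import namedtuple

# Stand-in for a MediaPipe blendshape category (assumed fields: category_name, score).
Category = namedtuple("Category", ["category_name", "score"])


def blendshape_scores(categories):
    """Index one face's blendshape categories by name: {name: score}."""
    return {c.category_name: c.score for c in categories}


def strongest_expression(scores, candidates, min_score=0.5):
    """Return the candidate with the highest score at or above min_score, else None."""
    best, best_score = None, float("-inf")
    for name in candidates:
        s = scores.get(name, 0.0)
        if s >= min_score and s > best_score:
            best, best_score = name, s
    return best


# A few of the 52 categories for one face; values are made up for illustration.
face = [
    Category("_neutral", 0.01),
    Category("browInnerUp", 0.72),
    Category("jawOpen", 0.64),
    Category("mouthSmileLeft", 0.20),
]
scores = blendshape_scores(face)
print(strongest_expression(scores, ["browInnerUp", "jawOpen", "mouthSmileLeft"]))  # browInnerUp
print(strongest_expression(scores, ["mouthSmileLeft"]))  # None
```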
