
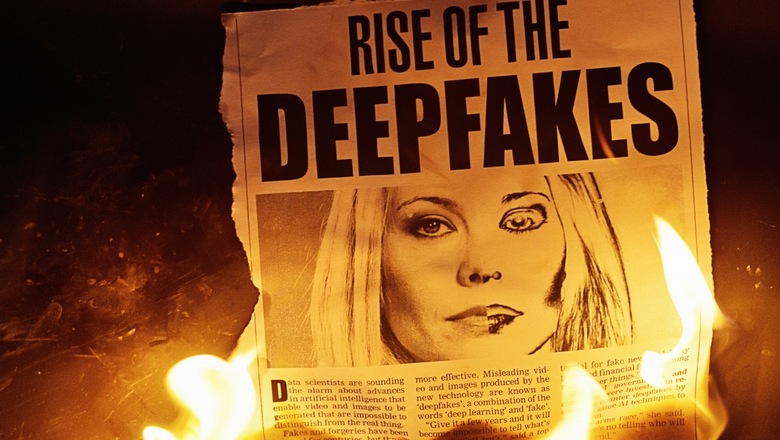

Deepfakes pose a significant threat to both men and women, but women have increasingly been the victims of such malicious content. Deepfakes misuse artificial intelligence (AI) to manipulate videos and photos, and they are most often used to generate non-consensual pornography targeting women.

Recent examples include the viral deepfakes that targeted actresses Rashmika Mandanna and Alia Bhatt. A recent report suggested that India is one of the countries most susceptible to this emerging digital threat, with celebrities and politicians particularly vulnerable. But its victims are not limited to famous faces. The more popular and user-friendly AI tools become, the greater the threat of such harm to any woman.

The technology itself doesn’t discriminate based on gender; rather, its misuse tends to reflect societal biases and gendered power dynamics. From Photoshop-like tools earlier to deepfake technology now, women have been disproportionately targeted for a number of reasons: misogyny, sexism, objectification, and gaslighting.

Studies have shown that women are far more likely than men to be the subjects of deepfakes, which compounds the impact of this abuse. The disparity highlights the underlying gender biases and inequalities that exist in society and the ways in which technology can be weaponized to perpetuate these harmful norms.

Addressing the issue of deepfake abuse against women or anyone else requires a multifaceted approach that combines legal, technological, and societal interventions, such as raising public awareness.

From a legal standpoint, the Centre recently stated that there will be a framework to counter such content on online platforms, whether in the form of new regulations or as part of existing ones. The government is currently working with the industry to make sure that such videos are detected before they go viral and, if they are uploaded anyway, can be reported as early as possible.

Some methods and techniques can help identify deepfakes. These include watching for unnatural facial and body movements, mismatches between audio and visuals, and abnormalities in the context or background. Quality discrepancies are another sign: deepfakes may show lower quality or resolution in certain areas, especially around the face or along edges where the manipulation has occurred.

Apart from these, there are emerging tools and software designed to detect anomalies in images and videos that might indicate manipulation. These tools use AI and machine learning algorithms to spot inconsistencies. Consulting with experts in digital forensics or image and video analysis can also provide valuable insights.

Additionally, awareness plays a crucial role in mitigating the negative impacts of deepfakes. Educating the public about the existence and capabilities of deepfakes can empower individuals to recognize and critically evaluate the content they consume online.

But since the AI platforms where such videos are created and social media platforms where such content is posted play major roles, it is important to ask industry leaders about their own plans and initiatives to address the deepfake challenge. What steps are they taking to detect and remove deepfake content from their platforms? What research are they funding or conducting to develop better detection and prevention technologies? What partnerships are they forming with other stakeholders to address this issue?

Asking these questions and engaging in a dialogue with social media and AI industry leaders could lead to a more effective and comprehensive approach to regulating, detecting, and preventing deepfakes.
